Not so long ago, the idea of making a cinematic film from just a line of text sounded like a science fiction plot. But as autumn 2025 rolls in, Google has quietly rewritten that script — not just with a better AI, but with a vision of how humans and machines can co-create stories.

At the heart of this revolution is Veo 3.1, the latest evolution of Google’s generative video model. Its launch wasn’t flashy, but its impact is already echoing through the studios of indie creators, animation houses, and forward-thinking filmmakers. Because Veo 3.1 isn’t just another AI — it’s a filmmaker in code.

Act I: The Sound of a New Beginning

When the earliest versions of Veo hit the scene, they impressed with visuals: surreal dreamscapes, believable characters, and motion that flowed like water. But something was missing: sound.

Veo 3 broke that silence, and Veo 3.1 builds on it.

The model doesn’t just generate what you see; it generates what you hear. Ambient noise, synchronized dialogue, background music, the soft crunch of gravel beneath footsteps: all of it arrives in step with the visuals.

A quiet city street hums with life. A suspenseful scene builds with rising tension in the score. And the best part? It all comes directly from your prompt. No separate audio design required.

It’s the first time AI has begun to understand not just how to show a story, but how to tell one.

Act II: The Ingredients of Imagination

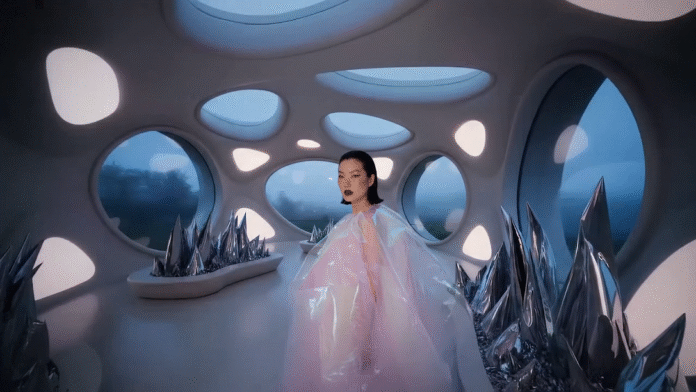

In a small studio apartment in Toronto, a young animator feeds three reference images into a tool called Flow — a stylish character sketch, a futuristic corridor, and a glowing artifact.

She clicks “Generate.”

Veo 3.1 responds instantly. The generated video doesn’t just guess; it remembers. Her character stays consistent across shots. The corridor’s lighting matches the reference. The artifact pulses with the same eerie glow she designed.

This feature — called “Ingredients to Video” — changes everything. No more relying solely on words to guide generation. Now, creators can use images as anchors — visual ingredients for the AI to cook with.

For storytellers trying to maintain continuity in scenes, characters, or branded visuals, this is the bridge they’ve been waiting for.

Act III: The Space Between Frames

A filmmaker sits with two images on his screen: the sun rising over a desolate desert, and a lone traveler disappearing into a storm.

“Connect these,” he types.

Veo 3.1 steps in, generating everything in between — the footsteps, the changing light, the wind picking up. It’s not just animation; it’s cinematic transition, guided by the user’s chosen start and end frames.

This new ability — to guide a scene by framing its beginning and end — opens the door to visual poetry. Metaphors, time lapses, emotional arcs… all told through the motion between stillness.

Act IV: Longer Stories, Fewer Cuts

One of the biggest challenges for AI video has always been length. Clips felt like fragments — beautiful, but short-lived.

Veo 3.1 changes the pace.

With Scene Extension, creators can take a short clip and grow it, frame by frame, second by second. The model looks at the last moment, understands the mood, and carries it forward naturally — as if it’s breathing life into the next scene.

For the first time, long-form storytelling with AI doesn’t feel stitched together. It flows.

Act V: Editing in Motion

Google’s Flow, the tool built around Veo and powered by Gemini, receives a quiet but powerful upgrade. Now, inside this interface, creators can add or remove objects from a scene — with lighting, motion, and perspective still intact.

A drone vanishes from the skyline. A cup appears on a table. A lamp is replaced with a flickering candle.

These aren’t static edits. They move, evolve, and blend with the footage. And while the Insert and Remove tools are still rolling out, it’s clear: this is Photoshop for video, powered by AI.

The creative control once reserved for large post-production teams is now in the hands of anyone with an idea — and an internet connection.

Epilogue: Where Machine Meets Muse

Veo 3.1 isn’t flashy on the surface. It doesn’t shout. It just works — with a quiet brilliance that lets creators dream bigger and prototype faster.

For studios like Promise and Latitude, it’s already reshaping the way storyboards are built and narratives are animated. But the real promise lies ahead — in the hands of everyday storytellers who suddenly have a full cinematic toolkit waiting behind a prompt.

This isn’t just an AI that generates videos. This is a collaborator, a co-director, a quiet revolution in storytelling. And it’s just beginning.

Google DeepMind released Veo 3.1 on October 15, 2025. It enables videos of up to 60 seconds with integrated lifelike audio, dialogue, and effects, plus image references for consistent characters and scenes.

The model offers clip extension and frame controls, along with improved physics, textures, and motion, and is accessible via the Gemini API and Flow. Partners like Higgsfield AI and HeyGen are integrating it for advanced video production, and users note its strong narrative precision in comparisons with competitors like Sora 2.