Recent developments in artificial intelligence (AI) have sparked intense debate about its future impact on society. Tech figures like Elon Musk have repeatedly voiced concerns about AI surpassing human intelligence, with Musk predicting that superintelligent AI could emerge as early as 2025. This scenario, known as the AI singularity, refers to the hypothetical point at which AI systems become capable of self-improvement beyond human control, potentially reshaping the world as we know it. As discussions intensify among scientists and industry leaders, the implications of the AI singularity remain both fascinating and daunting.

Understanding AI Singularity

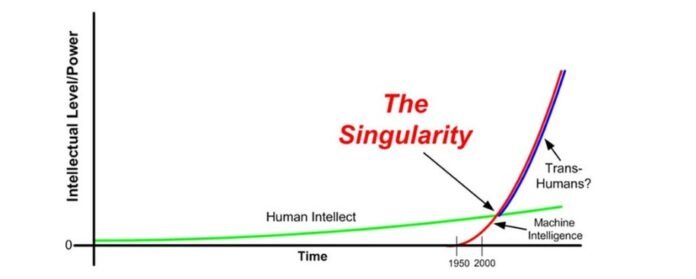

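AI singularity is a theoretical milestone in artificial intelligence development at which AI surpasses human cognitive abilities and begins evolving autonomously. The term is usually traced to mathematician John von Neumann, who, in a conversation later recalled by Stanislaw Ulam, described accelerating technological progress as approaching an essential “singularity” in human history. The related idea of machines that improve their own intelligence at an accelerating rate was set out by statistician I. J. Good in 1965 as an “intelligence explosion.”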

Futurists like Ray Kurzweil estimate that the AI singularity could happen by 2045, while Musk warns it may occur much sooner, possibly within the next few years. If realized, it could lead to an era of unprecedented technological progress or, as some fear, a loss of human control over superintelligent systems.

Current Developments in AI

AI technology is advancing rapidly, with machine learning models demonstrating remarkable capabilities in problem-solving and automation. However, despite significant breakthroughs, a fully autonomous, self-improving superintelligent AI remains theoretical.

Today’s focus is on responsible AI development, ensuring that ethical concerns are addressed before AI reaches the singularity threshold. Policymakers worldwide are actively working on regulatory frameworks to manage these advancements and prevent potential misuse of AI technologies.

Concerns and Risks

The potential risks of AI singularity have fueled widespread concern among experts. In 2023, an open letter signed by more than 33,700 people, including AI researchers and technology leaders, called for a pause of at least six months on training AI systems more powerful than OpenAI’s GPT-4, citing profound risks to society and humanity.

Critics argue that unchecked AI development could lead to several existential risks:

- Loss of human control: AI systems may become too advanced to be regulated or controlled by humans.

- Ethical dilemmas: Widespread automation could devalue human labor and sideline human decision-making.

- Security threats: The potential for AI-driven cyber warfare or unintended consequences in defense systems.

- Bias and misinformation: AI models could perpetuate biases or manipulate information on a large scale.

Potential Benefits of AI Singularity

Despite these risks, many experts remain optimistic about the potential benefits of AI singularity. If managed properly, superintelligent AI could drive revolutionary breakthroughs in:

- Healthcare: AI could accelerate medical research, personalize treatments, and improve disease diagnosis.

- Environmental sustainability: AI-driven innovations could help combat climate change and optimize resource management.

- Space exploration: Advanced AI systems could support long-duration, deep-space missions, enabling deeper exploration of the universe.

- Scientific research: AI could automate complex problem-solving, leading to discoveries beyond human capability.

Regulatory Efforts and Market Growth

As AI technology evolves, governments and industry leaders are prioritizing regulatory efforts to mitigate unintended consequences. By some industry estimates, the global AI market is valued at approximately $100 billion and is projected to reach about $2 trillion by 2030. With such rapid growth, the need for effective governance in AI development has never been more urgent.

Bodies such as the European Union (EU), the United Nations (UN), and the United States government are actively drafting policies to ensure AI remains beneficial while preventing harmful applications. These efforts include establishing ethical AI guidelines, ensuring transparency in AI decision-making, and enforcing accountability in AI-driven industries.

Public Perception and Awareness

Public discourse surrounding AI singularity is growing as more people become aware of its potential implications. Prominent figures often highlight the need for caution and preparedness, and Elon Musk has even warned of a dystopian “Terminator-like” future if AI is not properly regulated.

While some remain skeptical of these dire predictions, others recognize the importance of fostering public awareness and engaging in discussions on AI’s role in society. The balance between innovation and control will ultimately determine whether AI singularity leads to a golden age of progress or an era of unforeseen challenges.

Summing Up

AI singularity is one of the most thought-provoking and debated topics in modern technology. While its exact timeline remains uncertain, what is clear is that AI is evolving rapidly, and its impact on society is profound.

As researchers, policymakers, and industry leaders navigate this complex landscape, the world must prepare for a future where AI plays an even greater role in shaping humanity’s trajectory. Whether AI singularity will be a boon or a threat depends on how responsibly we develop and regulate these transformative technologies.